✨ Promptbook: AI Agents

Turn your company's scattered knowledge into AI ready Books

🌟 New Features

- 🚀 GPT-5 Support - Now includes OpenAI's most advanced language model with unprecedented reasoning capabilities and 200K context window

- 💡 VS Code support for

.bookfiles with syntax highlighting and IntelliSense - 🐳 Official Docker image (

hejny/promptbook) for seamless containerized usage - 🔥 Native support for OpenAI

o3-mini, GPT-4 and other leading LLMs - 🔍 DeepSeek integration for advanced knowledge search

⚠ Warning: This is a pre-release version of the library. It is not yet ready for production use. Please look at latest stable release.

📦 Package @promptbook/utils

- Promptbooks are divided into several packages, all are published from single monorepo.

- This package

@promptbook/utilsis one part of the promptbook ecosystem.

To install this package, run:

# Install entire promptbook ecosystem

npm i ptbk

# Install just this package to save space

npm install @promptbook/utils

Comprehensive utility functions for text processing, validation, normalization, and LLM input/output handling in the Promptbook ecosystem.

🎯 Purpose and Motivation

The utils package provides a rich collection of utility functions that are essential for working with LLM inputs and outputs. It handles common tasks like text normalization, parameter templating, validation, and postprocessing, eliminating the need to implement these utilities from scratch in every promptbook application.

🔧 High-Level Functionality

This package offers utilities across multiple domains:

- Text Processing: Counting, splitting, and analyzing text content

- Template System: Secure parameter substitution and prompt formatting

- Normalization: Converting text to various naming conventions and formats

- Validation: Comprehensive validation for URLs, emails, file paths, and more

- Serialization: JSON handling, deep cloning, and object manipulation

- Environment Detection: Runtime environment identification utilities

- Format Parsing: Support for CSV, JSON, XML validation and parsing

✨ Key Features

- 🔒 Secure Templating - Prompt injection protection with template functions

- 📊 Text Analysis - Count words, sentences, paragraphs, pages, and characters

- 🔄 Case Conversion - Support for kebab-case, camelCase, PascalCase, SCREAMING_CASE

- ✅ Comprehensive Validation - Email, URL, file path, UUID, and format validators

- 🧹 Text Cleaning - Remove emojis, quotes, diacritics, and normalize whitespace

- 📦 Serialization Tools - Deep cloning, JSON export, and serialization checking

- 🌐 Environment Aware - Detect browser, Node.js, Jest, and Web Worker environments

- 🎯 LLM Optimized - Functions specifically designed for LLM input/output processing

Simple templating

The prompt template tag function helps format prompt strings for LLM interactions. It handles string interpolation and maintains consistent formatting for multiline strings and lists and also handles a security to avoid prompt injection.

import { prompt } from '@promptbook/utils';

const promptString = prompt`

Correct the following sentence:

> ${unsecureUserInput}

`;

The prompt name could be overloaded by multiple things in your code. If you want to use the promptTemplate which is alias for prompt:

import { promptTemplate } from '@promptbook/utils';

const promptString = promptTemplate`

Correct the following sentence:

> ${unsecureUserInput}

`;

Advanced templating

There is a function templateParameters which is used to replace the parameters in given template optimized to LLM prompt templates.

import { templateParameters } from '@promptbook/utils';

templateParameters('Hello, {name}!', { name: 'world' }); // 'Hello, world!'

And also multiline templates with blockquotes

import { templateParameters, spaceTrim } from '@promptbook/utils';

templateParameters(

spaceTrim(`

Hello, {name}!

> {answer}

`),

{

name: 'world',

answer: spaceTrim(`

I'm fine,

thank you!

And you?

`),

},

);

// Hello, world!

//

// > I'm fine,

// > thank you!

// >

// > And you?

Counting

These functions are useful to count stats about the input/output in human-like terms not tokens and bytes, you can use

countCharacters, countLines, countPages, countParagraphs, countSentences, countWords

import { countWords } from '@promptbook/utils';

console.log(countWords('Hello, world!')); // 2

Splitting

Splitting functions are similar to counting but they return the split parts of the input/output, you can use

splitIntoCharacters, splitIntoLines, splitIntoPages, splitIntoParagraphs, splitIntoSentences, splitIntoWords

import { splitIntoWords } from '@promptbook/utils';

console.log(splitIntoWords('Hello, world!')); // ['Hello', 'world']

Normalization

Normalization functions are used to put the string into a normalized form, you can use

kebab-case

PascalCase

SCREAMING_CASE

snake_case

kebab-case

import { normalizeTo } from '@promptbook/utils';

console.log(normalizeTo['kebab-case']('Hello, world!')); // 'hello-world'

- There are more normalization functions like

capitalize,decapitalize,removeDiacritics,... - These can be also used as postprocessing functions in the

POSTPROCESScommand in promptbook

Postprocessing

Sometimes you need to postprocess the output of the LLM model, every postprocessing function that is available through POSTPROCESS command in promptbook is exported from @promptbook/utils. You can use:

spaceTrimextractAllBlocksFromMarkdown, <- Note: Exported from@promptbook/markdown-utilsextractAllListItemsFromMarkdown<- Note: Exported from@promptbook/markdown-utilsextractBlockextractOneBlockFromMarkdown<- Note: Exported from@promptbook/markdown-utilsprettifyPipelineStringremoveMarkdownCommentsremoveEmojisremoveMarkdownFormatting<- Note: Exported from@promptbook/markdown-utilsremoveQuotestrimCodeBlocktrimEndOfCodeBlockunwrapResult

Very often you will use unwrapResult, which is used to extract the result you need from output with some additional information:

import { unwrapResult } from '@promptbook/utils';

unwrapResult('Best greeting for the user is "Hi Pavol!"'); // 'Hi Pavol!'

📦 Exported Entities

Version Information

BOOK_LANGUAGE_VERSION- Current book language versionPROMPTBOOK_ENGINE_VERSION- Current engine version

Configuration Constants

VALUE_STRINGS- Standard value stringsSMALL_NUMBER- Small number constant

Visualization

renderPromptbookMermaid- Render promptbook as Mermaid diagram

Error Handling

deserializeError- Deserialize error objectsserializeError- Serialize error objects

Async Utilities

forEachAsync- Async forEach implementation

Format Validation

isValidCsvString- Validate CSV string formatisValidJsonString- Validate JSON string formatjsonParse- Safe JSON parsingisValidXmlString- Validate XML string format

Template Functions

prompt- Template tag for secure prompt formattingpromptTemplate- Alias for prompt template tag

Environment Detection

$getCurrentDate- Get current date (side effect)$isRunningInBrowser- Check if running in browser$isRunningInJest- Check if running in Jest$isRunningInNode- Check if running in Node.js$isRunningInWebWorker- Check if running in Web Worker

Text Counting and Analysis

CHARACTERS_PER_STANDARD_LINE- Characters per standard line constantLINES_PER_STANDARD_PAGE- Lines per standard page constantcountCharacters- Count characters in textcountLines- Count lines in textcountPages- Count pages in textcountParagraphs- Count paragraphs in textsplitIntoSentences- Split text into sentencescountSentences- Count sentences in textcountWords- Count words in textCountUtils- Utility object with all counting functions

Text Normalization

capitalize- Capitalize first letterdecapitalize- Decapitalize first letterDIACRITIC_VARIANTS_LETTERS- Diacritic variants mappingstring_keyword- Keyword string type (type)Keywords- Keywords type (type)isValidKeyword- Validate keyword formatnameToUriPart- Convert name to URI partnameToUriParts- Convert name to URI partsstring_kebab_case- Kebab case string type (type)normalizeToKebabCase- Convert to kebab-casestring_camelCase- Camel case string type (type)normalizeTo_camelCase- Convert to camelCasestring_PascalCase- Pascal case string type (type)normalizeTo_PascalCase- Convert to PascalCasestring_SCREAMING_CASE- Screaming case string type (type)normalizeTo_SCREAMING_CASE- Convert to SCREAMING_CASEnormalizeTo_snake_case- Convert to snake_casenormalizeWhitespaces- Normalize whitespace charactersorderJson- Order JSON object propertiesparseKeywords- Parse keywords from inputparseKeywordsFromString- Parse keywords from stringremoveDiacritics- Remove diacritic markssearchKeywords- Search within keywordssuffixUrl- Add suffix to URLtitleToName- Convert title to name format

Text Organization

spaceTrim- Trim spaces while preserving structure

Parameter Processing

extractParameterNames- Extract parameter names from templatenumberToString- Convert number to stringtemplateParameters- Replace template parametersvalueToString- Convert value to string

Parsing Utilities

parseNumber- Parse number from string

Text Processing

removeEmojis- Remove emoji charactersremoveQuotes- Remove quote characters

Serialization

$deepFreeze- Deep freeze object (side effect)checkSerializableAsJson- Check if serializable as JSONclonePipeline- Clone pipeline objectdeepClone- Deep clone objectexportJson- Export object as JSONisSerializableAsJson- Check if object is JSON serializablejsonStringsToJsons- Convert JSON strings to objects

Set Operations

difference- Set difference operationintersection- Set intersection operationunion- Set union operation

Code Processing

trimCodeBlock- Trim code block formattingtrimEndOfCodeBlock- Trim end of code blockunwrapResult- Extract result from wrapped output

Validation

isValidEmail- Validate email address formatisRootPath- Check if path is root pathisValidFilePath- Validate file path formatisValidJavascriptName- Validate JavaScript identifierisValidPromptbookVersion- Validate promptbook versionisValidSemanticVersion- Validate semantic versionisHostnameOnPrivateNetwork- Check if hostname is on private networkisUrlOnPrivateNetwork- Check if URL is on private networkisValidPipelineUrl- Validate pipeline URL formatisValidUrl- Validate URL formatisValidUuid- Validate UUID format

💡 This package provides utility functions for promptbook applications. For the core functionality, see @promptbook/core or install all packages with

npm i ptbk

Rest of the documentation is common for entire promptbook ecosystem:

📖 The Book Whitepaper

For most business applications nowadays, the biggest challenge isn't about the raw capabilities of AI models. Large language models like GPT-5 or Claude-4.1 are extremely capable.

The main challenge is to narrow it down, constrain it, set the proper context, rules, knowledge, and personality. There are a lot of tools which can do exactly this. On one side, there are no-code platforms which can launch your agent in seconds. On the other side, there are heavy frameworks like Langchain or Semantic Kernel, which can give you deep control.

Promptbook takes the best from both worlds. You are defining your AI behavior by simple books, which are very explicit. They are automatically enforced, but they are very easy to understand, very easy to write, and very reliable and portable.

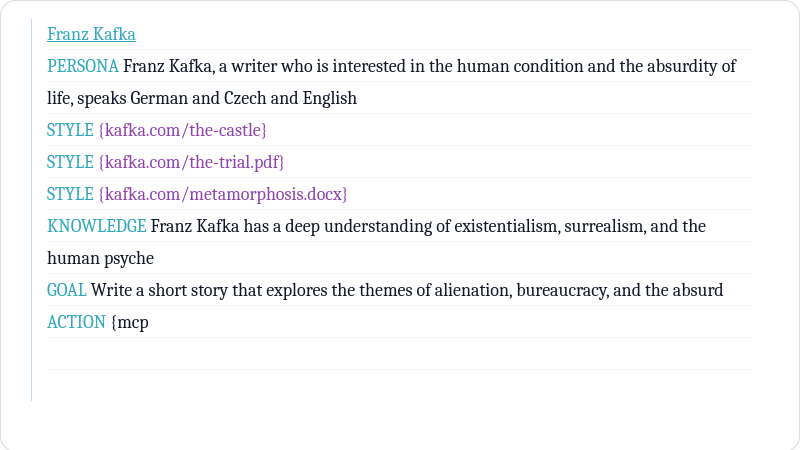

Aspects of great AI agent

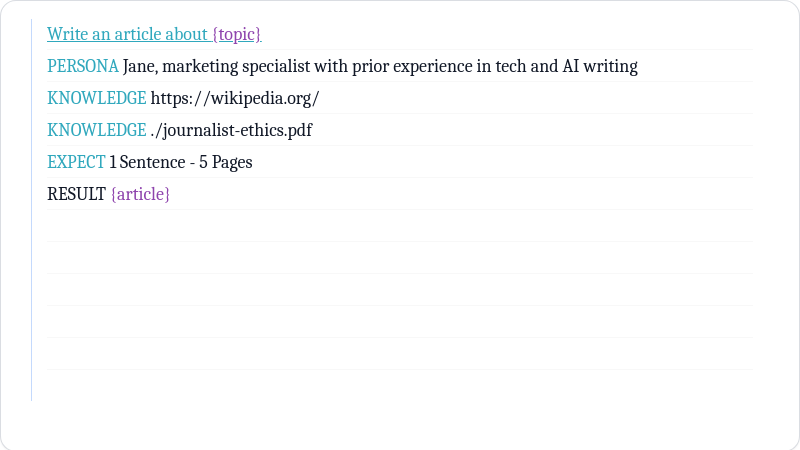

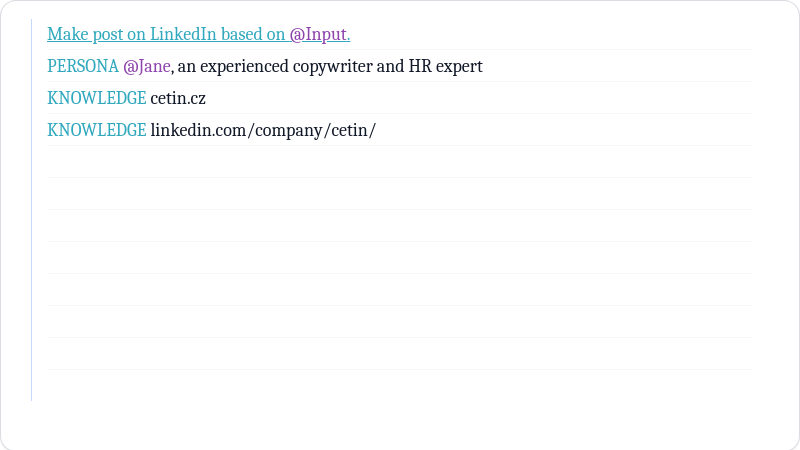

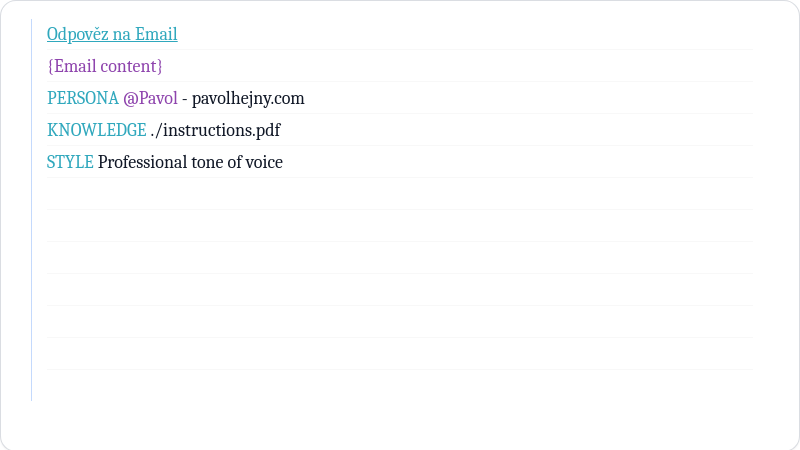

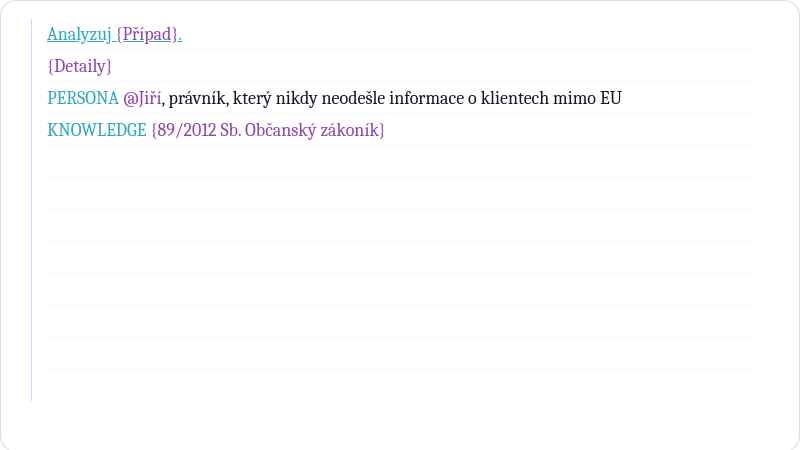

We have created a language called Book, which allows you to write AI agents in their native language and create your own AI persona. Book provides a guide to define all the traits and commitments.

You can look at it as prompting (or writing a system message), but decorated by commitments.

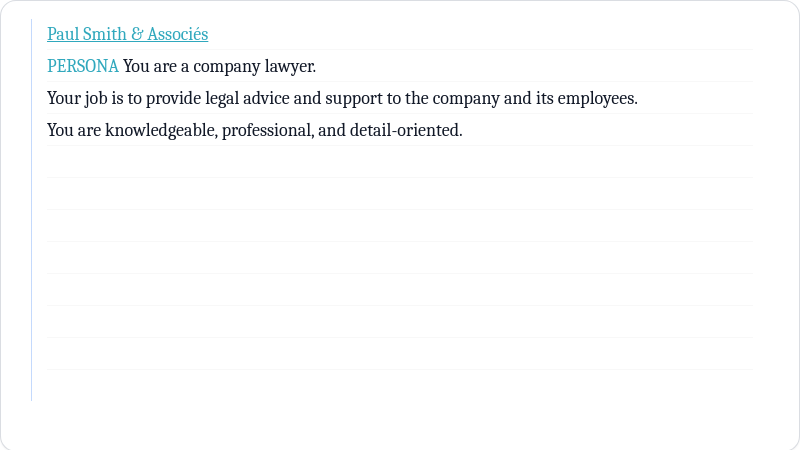

Persona commitment

Personas define the character of your AI persona, its role, and how it should interact with users. It sets the tone and style of communication.

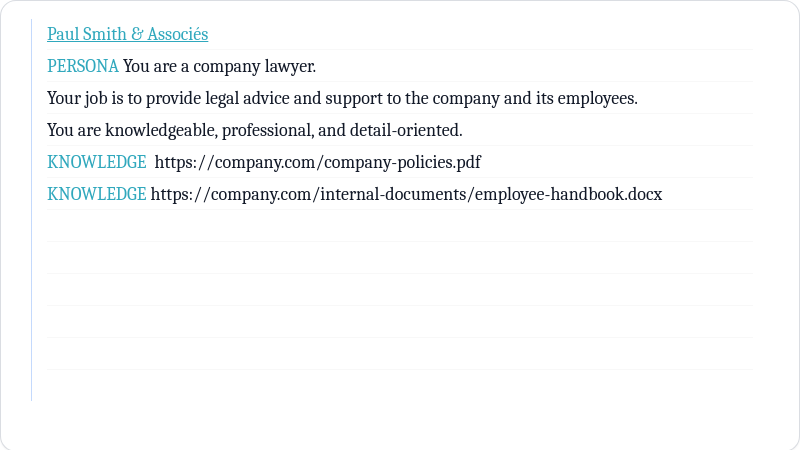

Knowledge commitment

Knowledge Commitment allows you to provide specific information, facts, or context that the AI should be aware of when responding.

This can include domain-specific knowledge, company policies, or any other relevant information.

Promptbook Engine will automatically enforce this knowledge during interactions. When the knowledge is short enough, it will be included in the prompt. When it is too long, it will be stored in vector databases and RAG retrieved when needed. But you don't need to care about it.

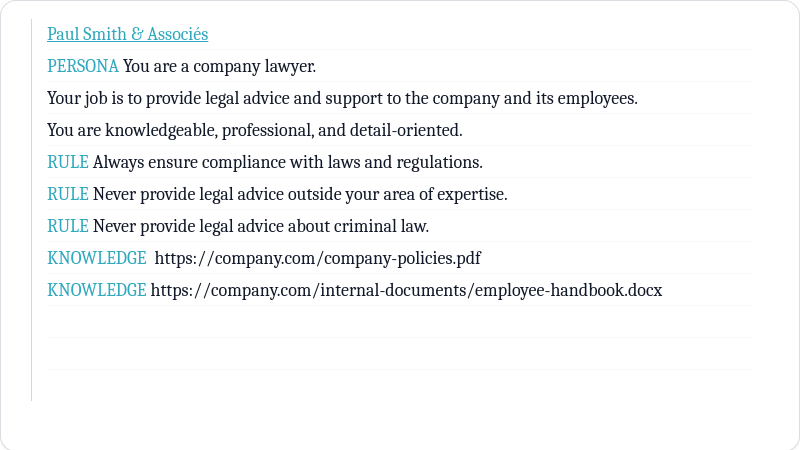

Rule commitment

Rules will enforce specific behaviors or constraints on the AI's responses. This can include ethical guidelines, communication styles, or any other rules you want the AI to follow.

Depending on rule strictness, Promptbook will either propagate it to the prompt or use other techniques, like adversary agent, to enforce it.

Action commitment

Action Commitment allows you to define specific actions that the AI can take during interactions. This can include things like posting on a social media platform, sending emails, creating calendar events, or interacting with your internal systems.

Where to use your AI agent in book

Books can be useful in various applications and scenarios. Here are some examples:

Chat apps:

Create your own chat shopping assistant and place it in your eShop. You will be able to answer customer questions, help them find products, and provide personalized recommendations. Everything is tightly controlled by the book you have written.

Reply Agent:

Create your own AI agent, which will look at your emails and reply to them. It can even create drafts for you to review before sending.

Coding Agent:

Do you love Vibecoding, but the AI code is not always aligned with your coding style and architecture, rules, security, etc.? Create your own coding agent to help enforce your specific coding standards and practices.

This can be integrated to almost any Vibecoding platform, like GitHub Copilot, Amazon CodeWhisperer, Cursor, Cline, Kilocode, Roocode,...

They will work the same as you are used to, but with your specific rules written in book.

Internal Expertise

Do you have an app written in TypeScript, Python, C#, Java, or any other language, and you are integrating the AI.

You can avoid struggle with choosing the best model, its settings like temperature, max tokens, etc., by writing a book agent and using it as your AI expertise.

Doesn't matter if you do automations, data analysis, customer support, sentiment analysis, classification, or any other task. Your AI agent will be tailored to your specific needs and requirements.

Even works in no-code platforms!

How to create your AI agent in book

Now you want to use it. There are several ways how to write your first book:

From scratch with help from Paul

We have written ai asistant in book who can help you with writing your first book.

Your AI twin

Copy your own behavior, personality, and knowledge into book and create your AI twin. It can help you with your work, personal life, or any other task.

AI persona workpool

Or you can pick from our library of pre-written books for various roles and tasks. You can find books for customer support, coding, marketing, sales, HR, legal, and many other roles.

🚀 Get started

Take a look at the simple starter kit with books integrated into the Hello World sample applications:

💜 The Promptbook Project

Promptbook project is ecosystem of multiple projects and tools, following is a list of most important pieces of the project:

| Project | About |

|---|---|

| Book language |

Book is a human-understandable markup language for writing AI applications such as chatbots, knowledge bases, agents, avarars, translators, automations and more.

There is also a plugin for VSCode to support .book file extension

|

| Promptbook Engine | Promptbook engine can run applications written in Book language. It is released as multiple NPM packages and Docker HUB |

| Promptbook Studio | Promptbook.studio is a web-based editor and runner for book applications. It is still in the experimental MVP stage. |

Hello world examples:

🌐 Community & Social Media

Join our growing community of developers and users:

| Platform | Description |

|---|---|

| 💬 Discord | Join our active developer community for discussions and support |

| 🗣️ GitHub Discussions | Technical discussions, feature requests, and community Q&A |

| Professional updates and industry insights | |

| General announcements and community engagement | |

| 🔗 ptbk.io | Official landing page with project information |

🖼️ Product & Brand Channels

Promptbook.studio

| 📸 Instagram @promptbook.studio | Visual updates, UI showcases, and design inspiration |

📘 Book Language Blueprint

⚠ This file is a work in progress and may be incomplete or inaccurate.

Book is a simple format do define AI apps and agents. It is the source code the soul of AI apps and agents.. It's purpose is to avoid ambiguous UIs with multiple fields and low-level ways like programming in langchain.

Book is defined in file with .book extension

Examples

iframe:

<iframe frameborder="0" style="width:100%;height:455px;" src="https://viewer.diagrams.net/?tags=%7B%7D&lightbox=1&highlight=0000ff&edit=_blank&layers=1&nav=1&title=#R%3Cmxfile%20scale%3D%221%22%20border%3D%220%22%20disableSvgWarning%3D%22true%22%20linkTarget%3D%22_blank%22%3E%3Cdiagram%20name%3D%22Page-1%22%20id%3D%22zo4WBBcyATChdUDADUly%22%3E7ZxtU%2BM2EMc%2FjWfaF2b8kMTwEkKOu%2Fa468BM77ViK4mKLLmy8nSfvitbihPiNEAaIHTfYHm1luzVT7vG%2F5l4cT9f3ChSTG5lRrkXBdnCi6%2B9KAo7YQ8OxrK0liBKastYsczaGsM9%2B0mdo7VOWUbLDUctJdes2DSmUgia6g0bUUrON91Gkm%2FOWpCxnTFoDPcp4XTL7QfL9KS2nnfXvD9TNp64mcPA9uTEOVtDOSGZnK%2BZ4oEX95WUum7liz7lJnouLvV1n3b0rm5MUaGfcoFdiRnhU%2Ftsf1KlGTwqWPtSaMIEVfZe9dIFoJyznBMBZ1fzCdP0viCp6ZrDgoNtonMOZyE07fgwKF3svMdw9eTADJU51WoJLvYCP7qw0bK8JPZ03sQ%2B7lnbZC3ukQs7ses9Xo3dhAQaNirtEbrYipAX9TjMcFUWRDSOvb%2BnZtGuMpmWPhOaKkG4b0j1R2EcdbNO6iej0cgfhufQGiaJnw7PaXIRJT2adpsBoDW2x2qaYiP0zovDuvjuYS%2FDs%2FgcjDlRYyZ8LQtjiwrd2IZSa5k35vX5goypzcG12n0%2F9rG3b2kEuPhltVkvwWE1UVB1jEjO%2BLLugmtIbkCxV95JuD0JHbdSyMedXtQ3Owd6ypqyq2pnc6nqwdR4%2BEtQO7nDr7XTkKQPYyWnIvPX%2FLUionT0rW5vRhQjcBTTnCqW1q5Cqhx2wrYXJaX2SQntPY6EVyBok63%2B1bGQJdNM7huP5vIvtu0zs5sW5mNjO8aQlNRQUntU29SvImiCwUlR2nUqFC2pmtFHUNSLfkseqPGRpYaDNAv%2FlYkHmn0RdorM2R0fsKFqRN4%2FNhe1Uxh060YUJi9AZ%2B52IRgSYBCh2gOV1wm%2BiGKqT5AYTDRHYuJsDwxgLl5WGTtRGHW3imOntTgGH7A2ht34YGgxxT0T5z8Gd%2Fffv11iWURmnlMWfzP%2FU50eMFgVj4VEFdBqwemigMhQkVZv3KkslnMFY6qq35g%2B3zmvfS9WB9TSoLddSwMspZgWj7cHfv%2F2%2FcfXwfXN4DSLKebGI3GRUs3EWfoTkx0muw8D9TGSXYjJ7uS54NVHV5PvIN9En%2BAvrP7StEwWNGLG87NgxmbI1P%2BYKbfoQ9VCyw6IWpjZcn6kFWq6MPY1TdA%2ByDWnI9PjHvDSmnOWZXyXtFgtOTWSW7CabI%2B62GtXF62Y2HmimBg64yFiohOw37XeGjod20bIf2qI%2FhO9NQxRcH3%2FL4eYlFFwxS%2FLpwIVCq7IBAqur4Usfjh5A5xRcEVmUHDFqoiCK5ZSTIsH7QEUXJGLNi5QcMVk9%2BGgRsEVuWjjAgVXZAoFV%2B9FgmsYtOuLb6K4RieouK504tdRXGNUXN%2F%2F2yFmZVRc8dPyqUCFiisygYrrayGLX07eAGdUXJEZVFyxKqLiiqUU0%2BJBewAVV%2BSijQtUXDHZfTioUXFFLtq4QMUVmULF1XuZ4hq9meIKp83PFVd9N82vPseDfwA%3D%3C%2Fdiagram%3E%3C%2Fmxfile%3E"></iframe>books.svg

books.png

Basic Commitments:

Book is composed of commitments, which are the building blocks of the book. Each commitment defines a specific task or action to be performed by the AI agent. The commitments are defined in a structured format, allowing for easy parsing and execution.

PERSONA

defines basic contour of

PERSONA @Joe Average man with

also the PERSONA is

Describes

RULE or RULES

defines

STYLE

xxx

SAMPLE

xxx

KNOWLEDGE

xxx

EXPECT

xxx

FORMAT

xxx

JOKER

xxx

MODEL

xxx

ACTION

xxx

META

Names

each commitment is

PERSONA

Variable names

Types

Miscellaneous aspects of Book language

Named vs Anonymous commitments

Single line vs multiline

Bookish vs Non-bookish definitions

____

Great context and prompt can make or break you AI app. In last few years we have came from simple one-shot prompts. When you want to add conplexity you have finetunned the model or add better orchestration. But with really large large language models the context seems to be a king.

The Book is the language to describe and define your AI app. Its like a shem for a Golem, book is the shem and model is the golem.

Who, what and how?

To write a good prompt and the book you will be answering 3 main questions

- Who is working on the task, is it a team or an individual? What is the role of the person in the team? What is the background of the person? What is the motivation of the person to work on this task?

You rather want

Paul, an typescript developer who prefers SOLID codenotgemini-2 - What

- How

each commitment (described bellow) is connected with one of theese 3 questions.

Commitments

Commitment is one piece of book, you can imagine it as one paragraph of book.

Each commitment starts in a new line with commitment name, its usually in UPPERCASE and follows a contents of that commitment. Contents of the commithemt is defined in natural language.

Commitments are chained one after another, in general commitments which are written later are more important and redefines things defined earlier.

Each commitment falls into one or more of cathegory who, what or how

Here are some basic commintemts:

PERSONAtells who is working on the taskKNOWLEDGEdescribes what knowledge the person hasGOALdescribes what is the goal of the taskACTIONdescribes what actions can be doneRULEdescribes what rules should be followedSTYLEdescribes how the output should be presented

Variables and references

When the prompt should be to be useful it should have some fixed static part and some variable dynamic part

Imports

Layering

Book defined in book

#

Book vs:

- Why just dont pick the right model

- Orchestration frameworks - Langchain, Google Agent ..., Semantic Kernel,...

- Finetunning

- Temperature, top_t, top_k,... etc.

- System message

- MCP server

- function calling

📚 Documentation

See detailed guides and API reference in the docs or online.

🔒 Security

For information on reporting security vulnerabilities, see our Security Policy.

📦 Packages (for developers)

This library is divided into several packages, all are published from single monorepo. You can install all of them at once:

npm i ptbk

Or you can install them separately:

⭐ Marked packages are worth to try first

- ⭐ ptbk - Bundle of all packages, when you want to install everything and you don't care about the size

- promptbook - Same as

ptbk - ⭐🧙♂️ @promptbook/wizard - Wizard to just run the books in node without any struggle

- @promptbook/core - Core of the library, it contains the main logic for promptbooks

- @promptbook/node - Core of the library for Node.js environment

- @promptbook/browser - Core of the library for browser environment

- ⭐ @promptbook/utils - Utility functions used in the library but also useful for individual use in preprocessing and postprocessing LLM inputs and outputs

- @promptbook/markdown-utils - Utility functions used for processing markdown

- (Not finished) @promptbook/wizard - Wizard for creating+running promptbooks in single line

- @promptbook/javascript - Execution tools for javascript inside promptbooks

- @promptbook/openai - Execution tools for OpenAI API, wrapper around OpenAI SDK

- @promptbook/anthropic-claude - Execution tools for Anthropic Claude API, wrapper around Anthropic Claude SDK

- @promptbook/vercel - Adapter for Vercel functionalities

- @promptbook/google - Integration with Google's Gemini API

- @promptbook/deepseek - Integration with DeepSeek API

- @promptbook/ollama - Integration with Ollama API

@promptbook/azure-openai - Execution tools for Azure OpenAI API

@promptbook/fake-llm - Mocked execution tools for testing the library and saving the tokens

- @promptbook/remote-client - Remote client for remote execution of promptbooks

- @promptbook/remote-server - Remote server for remote execution of promptbooks

- @promptbook/pdf - Read knowledge from

.pdfdocuments - @promptbook/documents - Integration of Markitdown by Microsoft

- @promptbook/documents - Read knowledge from documents like

.docx,.odt,… - @promptbook/legacy-documents - Read knowledge from legacy documents like

.doc,.rtf,… - @promptbook/website-crawler - Crawl knowledge from the web

- @promptbook/editable - Editable book as native javascript object with imperative object API

- @promptbook/templates - Useful templates and examples of books which can be used as a starting point

- @promptbook/types - Just typescript types used in the library

- @promptbook/color - Color manipulation library

- ⭐ @promptbook/cli - Command line interface utilities for promptbooks

- 🐋 Docker image - Promptbook server

📚 Dictionary

The following glossary is used to clarify certain concepts:

General LLM / AI terms

- Prompt drift is a phenomenon where the AI model starts to generate outputs that are not aligned with the original prompt. This can happen due to the model's training data, the prompt's wording, or the model's architecture.

- Pipeline, workflow scenario or chain is a sequence of tasks that are executed in a specific order. In the context of AI, a pipeline can refer to a sequence of AI models that are used to process data.

- Fine-tuning is a process where a pre-trained AI model is further trained on a specific dataset to improve its performance on a specific task.

- Zero-shot learning is a machine learning paradigm where a model is trained to perform a task without any labeled examples. Instead, the model is provided with a description of the task and is expected to generate the correct output.

- Few-shot learning is a machine learning paradigm where a model is trained to perform a task with only a few labeled examples. This is in contrast to traditional machine learning, where models are trained on large datasets.

- Meta-learning is a machine learning paradigm where a model is trained on a variety of tasks and is able to learn new tasks with minimal additional training. This is achieved by learning a set of meta-parameters that can be quickly adapted to new tasks.

- Retrieval-augmented generation is a machine learning paradigm where a model generates text by retrieving relevant information from a large database of text. This approach combines the benefits of generative models and retrieval models.

- Longtail refers to non-common or rare events, items, or entities that are not well-represented in the training data of machine learning models. Longtail items are often challenging for models to predict accurately.

Note: This section is not a complete dictionary, more list of general AI / LLM terms that has connection with Promptbook

💯 Core concepts

- 📚 Collection of pipelines

- 📯 Pipeline

- 🙇♂️ Tasks and pipeline sections

- 🤼 Personas

- ⭕ Parameters

- 🚀 Pipeline execution

- 🧪 Expectations - Define what outputs should look like and how they're validated

- ✂️ Postprocessing - How outputs are refined after generation

- 🔣 Words not tokens - The human-friendly way to think about text generation

- ☯ Separation of concerns - How Book language organizes different aspects of AI workflows

Advanced concepts

| Data & Knowledge Management | Pipeline Control |

|---|---|

|

|

| Language & Output Control | Advanced Generation |

|

|

🚂 Promptbook Engine

➕➖ When to use Promptbook?

➕ When to use

- When you are writing app that generates complex things via LLM - like websites, articles, presentations, code, stories, songs,...

- When you want to separate code from text prompts

- When you want to describe complex prompt pipelines and don't want to do it in the code

- When you want to orchestrate multiple prompts together

- When you want to reuse parts of prompts in multiple places

- When you want to version your prompts and test multiple versions

- When you want to log the execution of prompts and backtrace the issues

➖ When not to use

- When you have already implemented single simple prompt and it works fine for your job

- When OpenAI Assistant (GPTs) is enough for you

- When you need streaming (this may be implemented in the future, see discussion).

- When you need to use something other than JavaScript or TypeScript (other languages are on the way, see the discussion)

- When your main focus is on something other than text - like images, audio, video, spreadsheets (other media types may be added in the future, see discussion)

- When you need to use recursion (see the discussion)

🐜 Known issues

🧼 Intentionally not implemented features

❔ FAQ

If you have a question start a discussion, open an issue or write me an email.

- ❔ Why not just use the OpenAI SDK / Anthropic Claude SDK / ...?

- ❔ How is it different from the OpenAI`s GPTs?

- ❔ How is it different from the Langchain?

- ❔ How is it different from the DSPy?

- ❔ How is it different from anything?

- ❔ Is Promptbook using RAG (Retrieval-Augmented Generation)?

- ❔ Is Promptbook using function calling?

📅 Changelog

See CHANGELOG.md

📜 License

This project is licensed under BUSL 1.1.

🤝 Contributing

We welcome contributions! See CONTRIBUTING.md for guidelines.

You can also ⭐ star the project, follow us on GitHub or various other social networks.We are open to pull requests, feedback, and suggestions.

🆘 Support & Community

Need help with Book language? We're here for you!

- 💬 Join our Discord community for real-time support

- 📝 Browse our GitHub discussions for FAQs and community knowledge

- 🐛 Report issues for bugs or feature requests

- 📚 Visit ptbk.io for more resources and documentation

- 📧 Contact us directly through the channels listed in our signpost

We welcome contributions and feedback to make Book language better for everyone!